Part 1: PCA Autoencoder

Introduction

An interesting work by Ladjal et al. on autoencoders was brought to my attention by a colleague, as we are trying to leverage the nonlinearity of autoencoders to better decompose complex turbulent reacting flows. As stated in the abstract, the main goal is to improve the interpretability of autoencoders by constructing a latent space whose components are (1) independent and (2) ordered by decreasing importance to the data. Linear Principal Component Analysis (PCA) provides both properties automatically. To achieve (1), the authors propose to minimize the covariance of the latent codes alongside the standard reconstruction loss. The covariance term can be written as (based on Eq. 1 in the paper):

\[ \mathrm{cov}(z_i, z_k) = \frac{1}{M}\sum_{j=1}^{M} z_{i,j}\, z_{k,j} - \left(\frac{1}{M}\sum_{j=1}^{M} z_{i,j}\right)\left(\frac{1}{M}\sum_{j=1}^{M} z_{k,j}\right) \]

where \(M\) is the batch size and \(z_{i,j}\) is the \(i\)-th code in the latent space for the \(j\)-th sample of the batch. The authors introduced two modifications to a conventional CNN-based autoencoder to implement this loss function:

First, add a BatchNormalization layer before the latent space, so that each code has zero mean over the batch and the second term above vanishes.

Second, add the remaining term, the batch mean of the product of the codes, to the total loss.
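The effect of the two modifications can be checked numerically with the standard (biased, 1/M) sample covariance. It equals the batch mean of the product minus the product of the batch means. Once each code has zero batch mean, only the first term is left. In the NumPy sketch below, `z` is random stand-in data rather than encoder output, and `lam` plays the role of the penalty weight.

```python
import numpy as np

rng = np.random.default_rng(0)
M = 500  # batch size
# stand-in latent codes: two correlated columns with nonzero means
z1 = rng.normal(1.0, 1.0, M)
z2 = 0.5 * z1 + rng.normal(-2.0, 1.0, M)
z = np.stack([z1, z2], axis=1)  # shape (M, 2)

def sample_cov(z):
    """Biased (1/M) sample covariance between the two columns of z."""
    first = np.mean(z[:, 0] * z[:, 1])            # batch mean of the product
    second = np.mean(z[:, 0]) * np.mean(z[:, 1])  # product of the batch means
    return first - second

# zero batch mean for each code (what the normalization is meant to ensure)
z_centered = z - z.mean(axis=0)

# for centered codes the second term is zero, so the penalty only needs
# the batch mean of z_1 * z_2
lam = 0.1
penalty = abs(lam * np.mean(np.prod(z_centered, axis=1)))
print(sample_cov(z), sample_cov(z_centered), penalty / lam)
```

Centering does not change the covariance itself. It only makes the cheaper "mean of the product" form exact.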

I will attempt to reproduce some of the key results of the paper in a two-part series using TensorFlow. In this notebook, I focus on implementing the new loss function with the .add_loss() method of custom layers in TensorFlow. In the second part, I will look at how to obtain a hierarchical latent space through the iterative procedure the authors describe. Following the authors, I will refer to this architecture as a PCA autoencoder.

Synthesize dataset

In this notebook, I will only recreate the set of binary ellipses with two variables (the horizontal and vertical semi-axes) and leave out the rotation for the time being.

Setup

```python
from functools import partial

import numpy as np
import matplotlib.pyplot as plt
import tensorflow as tf
from tensorflow import keras

np.random.seed(42)
tf.random.set_seed(42)
```

Phantom binary ellipses

I will use the same image size (64 × 64) as in the paper. With a batch size of 500, I chose a sample size of 8000 to get 16 minibatches per epoch. The paper does not seem to state the total sample size, unless I missed it.

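```python
def phantomEllipse(n, a, b):
    """Return an n x n binary image of a centered ellipse.

    a is the horizontal semi-axis (along columns), b the vertical one (along rows).
    """
    x = np.arange(n)
    R = n // 2
    y = x[:, np.newaxis]
    img = (x - R)**2 / a**2 + (y - R)**2 / b**2
    img[img <= 1] = 1  # inside the ellipse
    img[img > 1] = 0   # outside the ellipse
    return img

n = 64
num_batch = 16
batch_size = 500
N = int(num_batch * batch_size)

# seed the Generator explicitly: np.random.seed() does not affect default_rng()
random_gen = np.random.default_rng(42)
a = random_gen.uniform(1, n//2, N)
b = random_gen.uniform(1, n//2, N)

dataset = np.array([phantomEllipse(n, _a, _b) for _a, _b in zip(a, b)])
# pay attention to the shape of the dataset: (N, 64, 64, 1), since Conv2D expects a channel axis
dataset = dataset[..., np.newaxis]
```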
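The list comprehension above calls `phantomEllipse` once per sample. Broadcasting can instead build the whole stack in one call. This is a sketch: the name `phantom_ellipses` is hypothetical. It applies the same ellipse inequality, with the horizontal semi-axis along columns and the vertical one along rows, but it materializes the full (N, n, n) array at once.

```python
import numpy as np

def phantom_ellipses(n, a, b):
    """Hypothetical vectorized helper: stack of n x n binary ellipses.

    a, b: 1-D arrays of horizontal and vertical semi-axes (same length N).
    Returns an array of shape (N, n, n, 1) with values 0.0 or 1.0.
    """
    offsets = np.arange(n) - n // 2                   # pixel offsets from the center
    X = offsets[np.newaxis, np.newaxis, :]            # varies along columns
    Y = offsets[np.newaxis, :, np.newaxis]            # varies along rows
    A = np.asarray(a, dtype=float)[:, np.newaxis, np.newaxis]
    B = np.asarray(b, dtype=float)[:, np.newaxis, np.newaxis]
    inside = X**2 / A**2 + Y**2 / B**2 <= 1           # broadcast to (N, n, n)
    return inside.astype(float)[..., np.newaxis]      # add the channel axis

rng = np.random.default_rng(42)
a = rng.uniform(1, 32, 100)
b = rng.uniform(1, 32, 100)
images = phantom_ellipses(64, a, b)
```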

Let’s look at a sample of 8 images from the dataset:

```python
frames = np.random.choice(N, 8, replace=False)  # 8 distinct sample indices
_, ax = plt.subplots(1, 8, figsize=(12, 3))
for i in range(8):
    ax[i].imshow(dataset[frames[i], ..., 0], cmap='gray')
    ax[i].axis("off")
plt.show()
```

As can be seen, the ellipses have essentially two degrees of freedom (horizontal and vertical axes). Ideally, a latent space containing two codes in the autoencoder should suffice to capture these two main features in the dataset.
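For comparison with what the PCA autoencoder is meant to mimic, linear PCA can be computed directly from the flattened images with NumPy's SVD. The sketch below uses a small stand-in set of 500 ellipses generated inline, not the notebook's `dataset`. It checks the two PCA properties mentioned in the introduction: the component scores are uncorrelated, and their variances come out in decreasing order. It makes no claim about how many linear components these ellipses need.

```python
import numpy as np

rng = np.random.default_rng(0)
n, N = 64, 500                                   # image size, number of stand-in samples
offsets = np.arange(n) - n // 2                  # pixel offsets from the image center
a = rng.uniform(1, n // 2, N)[:, None, None]     # horizontal semi-axes
b = rng.uniform(1, n // 2, N)[:, None, None]     # vertical semi-axes
inside = offsets[None, None, :]**2 / a**2 + offsets[None, :, None]**2 / b**2 <= 1
X = inside.reshape(N, -1).astype(float)          # flattened binary images, shape (N, n*n)

X_centered = X - X.mean(axis=0)                  # PCA operates on mean-centered data
U, S, Vt = np.linalg.svd(X_centered, full_matrices=False)
scores = X_centered @ Vt.T                       # coordinates along the principal axes
explained = S**2 / np.sum(S**2)                  # explained-variance ratio, in SVD order

k = 5
score_cov = np.cov(scores[:, :k].T)              # covariance of the first k scores
print(np.round(explained[:k], 3))
```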

PCA Autoencoder

I will not strictly follow the model structure presented in the paper. Instead, I adopt a more conventional “pyramid” architecture, with the number of filters increasing through the encoder's hidden layers (and decreasing through the decoder's). The goal here is not to tune the autoencoder for the lowest possible loss. The focus is rather on the functional differences between a conventional autoencoder and the PCA autoencoder introduced by the authors.
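The pyramid shape can be checked with a little arithmetic:

- each 3×3 convolution with `padding='same'` keeps the spatial size;
- each 2×2 max-pooling halves it;
- a Conv2D layer has k·k·c_in·c_out weights plus c_out biases.

The plain-Python sketch below recomputes the encoder's trainable parameter counts from these rules. They should agree with what Keras reports in `encoder.summary()`.

```python
# Encoder sketch: five Conv2D(3x3, 'same') + MaxPool2D(2x2) stages, then Dense(2)
kernel = 3
channels = [1, 4, 8, 16, 32, 64]   # input channels, then filters per stage
size = 64                          # input height/width

conv_params = []
for c_in, c_out in zip(channels[:-1], channels[1:]):
    conv_params.append(kernel * kernel * c_in * c_out + c_out)  # weights + biases
    size //= 2                     # 'same' conv keeps the size, 2x2 pooling halves it

flat = size * size * channels[-1]  # features entering Flatten/BatchNormalization
dense_params = flat * 2 + 2        # Dense(2): weights + biases
print(conv_params, size, flat, dense_params)
# prints: [40, 296, 1168, 4640, 18496] 2 256 514
```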

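Covariance loss

To implement the additional covariance loss term, I create a custom layer that calls the .add_loss() method inherited from keras.layers.Layer. The layer passes its inputs through unchanged and registers \(\lambda \left| \frac{1}{M}\sum_{j=1}^{M} z_{1,j}\, z_{2,j} \right|\) as an extra loss. Here \(z_{1,j}\) and \(z_{2,j}\) are the two latent codes of the \(j\)-th sample and \(\lambda\) is the lam argument. Keras adds this term to the compiled reconstruction loss during training.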

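```python
class LatentCovarianceLayer(keras.layers.Layer):
    """Identity layer that adds a covariance penalty on its inputs to the model loss."""

    def __init__(self, lam=0.1, **kwargs):
        super().__init__(**kwargs)
        self.lam = lam  # weight of the covariance penalty

    def call(self, inputs):
        # batch mean of z_1 * z_2 (the product over axis 1 equals z_1 * z_2
        # only because the latent space has exactly two codes)
        covariance = self.lam * tf.math.reduce_mean(tf.math.reduce_prod(inputs, 1))
        self.add_loss(tf.math.abs(covariance))
        return inputs  # the codes pass through unchanged

    def get_config(self):
        base_config = super().get_config()
        return {**base_config, "lam": self.lam}
```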
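`tf.math.reduce_prod(inputs, 1)` multiplies all codes of a sample together. That equals \(z_1 z_2\) only because the latent space here has two codes; with three or more codes it is no longer a pairwise covariance. Below is a hypothetical NumPy sketch of a pairwise version that reduces to the layer's penalty for two codes. The name `pairwise_cov_penalty` and the choice of summing absolute values over all pairs are assumptions, not the paper's formula.

```python
import numpy as np

def pairwise_cov_penalty(z, lam=0.1):
    """Hypothetical penalty: lam * sum over code pairs (i < k) of |mean_j z[j, i] * z[j, k]|.

    Assumes each column of z (shape (M, n_codes)) already has zero batch mean,
    so each batch-mean product is the covariance of that pair of codes.
    """
    M, n_codes = z.shape
    products = z.T @ z / M                     # (n_codes, n_codes) batch means of products
    upper = np.triu_indices(n_codes, k=1)      # pairs strictly above the diagonal
    return lam * np.sum(np.abs(products[upper]))

rng = np.random.default_rng(1)
codes = rng.normal(size=(500, 2))
codes -= codes.mean(axis=0)                    # zero batch mean, as assumed above
# what LatentCovarianceLayer.call computes for the same two codes
layer_value = abs(0.1 * np.mean(np.prod(codes, axis=1)))
```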

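Encoder

To neutralize the second term of the covariance (see above), a BatchNormalization layer without trainable parameters is added before the latent space. Setting both keyword arguments scale and center to False drops the learnable scale \(\gamma\) and offset \(\beta\). The 512 non-trainable parameters reported by encoder.summary() are the layer's moving mean and variance for the 256 flattened features. Note that the normalization here acts on those 256 features and is followed by a Dense layer (which has a bias) and a LeakyReLU. As a result, the two latent codes themselves are not guaranteed to have zero batch mean, and the second covariance term is not strictly removed.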
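In training mode, a BatchNormalization layer with `center=False` and `scale=False` standardizes each feature with the batch statistics: \((x - \mu_B)/\sqrt{\sigma_B^2 + \epsilon}\), where Keras's default \(\epsilon\) is \(10^{-3}\). The NumPy sketch below mimics that step on stand-in data. It also shows what an affine map with a bias, such as a Dense layer placed after the normalization, does to the batch mean: the mean becomes the bias. The helper name `batch_standardize` and the random weights are illustrative assumptions.

```python
import numpy as np

def batch_standardize(x, eps=1e-3):
    """Sketch of training-mode BatchNormalization with center=False, scale=False."""
    mu = x.mean(axis=0)          # batch mean per feature
    var = x.var(axis=0)          # batch variance per feature
    return (x - mu) / np.sqrt(var + eps)

rng = np.random.default_rng(2)
features = rng.normal(3.0, 2.0, size=(500, 256))   # stand-in for the 256 flattened features
h = batch_standardize(features)

W = rng.normal(size=(256, 2))                       # hypothetical Dense(2) weights
bias = np.array([0.7, -1.2])                        # hypothetical Dense(2) bias
codes = h @ W + bias                                # affine map applied after normalization
```

After the affine map, the batch mean of `codes` equals `bias` rather than zero.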

```python
encoder = keras.models.Sequential([
    # five Conv2D + max-pooling stages: 64x64x1 -> 2x2x64
    keras.layers.Conv2D(4, (3, 3), padding='same', input_shape=[64, 64, 1]),
    keras.layers.LeakyReLU(),
    keras.layers.MaxPool2D((2, 2)),
    keras.layers.Conv2D(8, (3, 3), padding='same'),
    keras.layers.LeakyReLU(),
    keras.layers.MaxPool2D((2, 2)),
    keras.layers.Conv2D(16, (3, 3), padding='same'),
    keras.layers.LeakyReLU(),
    keras.layers.MaxPool2D((2, 2)),
    keras.layers.Conv2D(32, (3, 3), padding='same'),
    keras.layers.LeakyReLU(),
    keras.layers.MaxPool2D((2, 2)),
    keras.layers.Conv2D(64, (3, 3), padding='same'),
    keras.layers.LeakyReLU(),
    keras.layers.MaxPool2D((2, 2)),
    keras.layers.Flatten(),
    # batch normalization without the learnable scale (gamma) and offset (beta)
    keras.layers.BatchNormalization(scale=False, center=False),
    # two-dimensional latent space
    keras.layers.Dense(2),
    keras.layers.LeakyReLU(),
    # identity layer that adds the covariance penalty (lam = 0.1)
    LatentCovarianceLayer(0.1)
])

encoder.summary()
```

```
Model: "sequential"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
conv2d (Conv2D)              (None, 64, 64, 4)         40
_________________________________________________________________
leaky_re_lu (LeakyReLU)      (None, 64, 64, 4)         0
_________________________________________________________________
max_pooling2d (MaxPooling2D) (None, 32, 32, 4)         0
_________________________________________________________________
conv2d_1 (Conv2D)            (None, 32, 32, 8)         296
_________________________________________________________________
leaky_re_lu_1 (LeakyReLU)    (None, 32, 32, 8)         0
_________________________________________________________________
max_pooling2d_1 (MaxPooling2 (None, 16, 16, 8)         0
_________________________________________________________________
conv2d_2 (Conv2D)            (None, 16, 16, 16)        1168
_________________________________________________________________
leaky_re_lu_2 (LeakyReLU)    (None, 16, 16, 16)        0
_________________________________________________________________
max_pooling2d_2 (MaxPooling2 (None, 8, 8, 16)          0
_________________________________________________________________
conv2d_3 (Conv2D)            (None, 8, 8, 32)          4640
_________________________________________________________________
leaky_re_lu_3 (LeakyReLU)    (None, 8, 8, 32)          0
_________________________________________________________________
max_pooling2d_3 (MaxPooling2 (None, 4, 4, 32)          0
_________________________________________________________________
conv2d_4 (Conv2D)            (None, 4, 4, 64)          18496
_________________________________________________________________
leaky_re_lu_4 (LeakyReLU)    (None, 4, 4, 64)          0
_________________________________________________________________
max_pooling2d_4 (MaxPooling2 (None, 2, 2, 64)          0
_________________________________________________________________
flatten (Flatten)            (None, 256)               0
_________________________________________________________________
batch_normalization (BatchNo (None, 256)               512
_________________________________________________________________
dense (Dense)                (None, 2)                 514
_________________________________________________________________
leaky_re_lu_5 (LeakyReLU)    (None, 2)                 0
_________________________________________________________________
latent_covariance_layer (Lat (None, 2)                 0
=================================================================
Total params: 25,666
Trainable params: 25,154
Non-trainable params: 512
_________________________________________________________________
```

Decoder

Instead of combining Conv2D and upsampling layers, I simply use Conv2DTranspose layers to keep the decoder compact.
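With `padding='same'` and `strides=2`, each Conv2DTranspose layer doubles the spatial size. Its parameter count is k·k·c_in·c_out weights plus c_out biases, as for Conv2D. The plain-Python sketch below traces the decoder's feature-map sizes from the 2×2×4 reshape back to 64×64. It also recomputes the parameter counts, which should match the decoder summary.

```python
# Decoder sketch: Dense(16) -> Reshape((2, 2, 4)) -> five Conv2DTranspose(3x3, strides=2, 'same')
kernel, stride = 3, 2
channels = [4, 32, 16, 8, 4, 1]   # channels after Reshape, then filters per layer
size = 2

dense_params = 2 * 16 + 16         # Dense(16) on the 2 latent codes: weights + biases
sizes, tconv_params = [size], []
for c_in, c_out in zip(channels[:-1], channels[1:]):
    size *= stride                  # padding='same': output size = input size * stride
    sizes.append(size)
    tconv_params.append(kernel * kernel * c_in * c_out + c_out)

total = dense_params + sum(tconv_params)
print(sizes, tconv_params, total)
# prints: [2, 4, 8, 16, 32, 64] [1184, 4624, 1160, 292, 37] 7345
```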

```python
decoder = keras.models.Sequential([
    # map the 2 latent codes to a 2x2x4 feature map
    keras.layers.Dense(16, input_shape=[2]),
    keras.layers.LeakyReLU(),
    keras.layers.Reshape((2, 2, 4)),
    # five stride-2 transposed convolutions: 2x2 -> 64x64
    keras.layers.Conv2DTranspose(32, (3, 3), strides=2, padding='same'),
    keras.layers.LeakyReLU(),
    keras.layers.Conv2DTranspose(16, (3, 3), strides=2, padding='same'),
    keras.layers.LeakyReLU(),
    keras.layers.Conv2DTranspose(8, (3, 3), strides=2, padding='same'),
    keras.layers.LeakyReLU(),
    keras.layers.Conv2DTranspose(4, (3, 3), strides=2, padding='same'),
    keras.layers.LeakyReLU(),
    # single-channel output with linear activation
    keras.layers.Conv2DTranspose(1, (3, 3), strides=2, padding='same'),
])

decoder.summary()
```

```
Model: "sequential_1"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
dense_1 (Dense)              (None, 16)                48
_________________________________________________________________
leaky_re_lu_6 (LeakyReLU)    (None, 16)                0
_________________________________________________________________
reshape (Reshape)            (None, 2, 2, 4)           0
_________________________________________________________________
conv2d_transpose (Conv2DTran (None, 4, 4, 32)          1184
_________________________________________________________________
leaky_re_lu_7 (LeakyReLU)    (None, 4, 4, 32)          0
_________________________________________________________________
conv2d_transpose_1 (Conv2DTr (None, 8, 8, 16)          4624
_________________________________________________________________
leaky_re_lu_8 (LeakyReLU)    (None, 8, 8, 16)          0
_________________________________________________________________
conv2d_transpose_2 (Conv2DTr (None, 16, 16, 8)         1160
_________________________________________________________________
leaky_re_lu_9 (LeakyReLU)    (None, 16, 16, 8)         0
_________________________________________________________________
conv2d_transpose_3 (Conv2DTr (None, 32, 32, 4)         292
_________________________________________________________________
leaky_re_lu_10 (LeakyReLU)   (None, 32, 32, 4)         0
_________________________________________________________________
conv2d_transpose_4 (Conv2DTr (None, 64, 64, 1)         37
=================================================================
Total params: 7,345
Trainable params: 7,345
Non-trainable params: 0
_________________________________________________________________
```

Compile and train
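The ReduceLROnPlateau callback in the training cell multiplies the learning rate by `factor=0.5` each time the training loss plateaus for `patience=25` epochs, but never below `min_lr`. The plain-Python sketch below only enumerates the learning rates this schedule can reach from Adam's initial 0.002. When each reduction actually happens depends on the training run.

```python
# Learning rates reachable with ReduceLROnPlateau(factor=0.5, min_lr=1e-5),
# starting from Adam(learning_rate=0.002): each reduction halves the rate,
# clipped at min_lr
lr, factor, min_lr = 0.002, 0.5, 1e-5
schedule = [lr]
while lr > min_lr:
    lr = max(lr * factor, min_lr)
    schedule.append(lr)
print(len(schedule) - 1, schedule[-2:])  # number of reductions, last two rates
```

At most eight reductions are possible before the rate is pinned at `min_lr`.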

```python
keras.backend.clear_session()

optimizer = tf.keras.optimizers.Adam(learning_rate=0.002)

# stack encoder and decoder; the covariance penalty registered by
# LatentCovarianceLayer is added to the MSE reconstruction loss
pca_ae = keras.models.Sequential([encoder, decoder])
pca_ae.compile(optimizer=optimizer, loss='mse')

tempfn = './model_pca_ae.hdf5'
# save the model whenever the training loss improves
model_cb = keras.callbacks.ModelCheckpoint(tempfn, monitor='loss', save_best_only=True, verbose=1)
# stop after 50 epochs without improvement
early_cb = keras.callbacks.EarlyStopping(monitor='loss', patience=50, verbose=1)
# halve the learning rate after 25 epochs without improvement
learning_rate_reduction = keras.callbacks.ReduceLROnPlateau(monitor='loss',
                                                            patience=25,
                                                            verbose=1,
                                                            factor=0.5,
                                                            min_lr=0.00001)
cb = [model_cb, early_cb, learning_rate_reduction]

history = pca_ae.fit(dataset, dataset,
                     epochs=1000,
                     batch_size=batch_size,
                     shuffle=True,
                     callbacks=cb)
```

Epoch 1/1000

16/16 [==============================] - 4s 29ms/step - loss: 0.2136

Epoch 00001: loss improved from inf to 0.21358, saving model to ./model_pca_ae.hdf5

Epoch 2/1000

16/16 [==============================] - 0s 28ms/step - loss: 0.1815

Epoch 00002: loss improved from 0.21358 to 0.18147, saving model to ./model_pca_ae.hdf5

Epoch 3/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.1620

Epoch 00003: loss improved from 0.18147 to 0.16198, saving model to ./model_pca_ae.hdf5

Epoch 4/1000

16/16 [==============================] - 0s 28ms/step - loss: 0.1137

Epoch 00004: loss improved from 0.16198 to 0.11374, saving model to ./model_pca_ae.hdf5

Epoch 5/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0751

Epoch 00005: loss improved from 0.11374 to 0.07507, saving model to ./model_pca_ae.hdf5

Epoch 6/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0592

Epoch 00006: loss improved from 0.07507 to 0.05918, saving model to ./model_pca_ae.hdf5

Epoch 7/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0520

Epoch 00007: loss improved from 0.05918 to 0.05198, saving model to ./model_pca_ae.hdf5

Epoch 8/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0465

Epoch 00008: loss improved from 0.05198 to 0.04655, saving model to ./model_pca_ae.hdf5

Epoch 9/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0434

Epoch 00009: loss improved from 0.04655 to 0.04344, saving model to ./model_pca_ae.hdf5

Epoch 10/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0405

Epoch 00010: loss improved from 0.04344 to 0.04046, saving model to ./model_pca_ae.hdf5

Epoch 11/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0389

Epoch 00011: loss improved from 0.04046 to 0.03890, saving model to ./model_pca_ae.hdf5

Epoch 12/1000

16/16 [==============================] - 0s 28ms/step - loss: 0.0368

Epoch 00012: loss improved from 0.03890 to 0.03684, saving model to ./model_pca_ae.hdf5

Epoch 13/1000

16/16 [==============================] - 0s 28ms/step - loss: 0.0352

Epoch 00013: loss improved from 0.03684 to 0.03524, saving model to ./model_pca_ae.hdf5

Epoch 14/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0340

Epoch 00014: loss improved from 0.03524 to 0.03400, saving model to ./model_pca_ae.hdf5

Epoch 15/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0332

Epoch 00015: loss improved from 0.03400 to 0.03320, saving model to ./model_pca_ae.hdf5

Epoch 16/1000

16/16 [==============================] - 0s 28ms/step - loss: 0.0319

Epoch 00016: loss improved from 0.03320 to 0.03191, saving model to ./model_pca_ae.hdf5

Epoch 17/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0309

Epoch 00017: loss improved from 0.03191 to 0.03089, saving model to ./model_pca_ae.hdf5

Epoch 18/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0303

Epoch 00018: loss improved from 0.03089 to 0.03033, saving model to ./model_pca_ae.hdf5

Epoch 19/1000

16/16 [==============================] - 0s 28ms/step - loss: 0.0294

Epoch 00019: loss improved from 0.03033 to 0.02943, saving model to ./model_pca_ae.hdf5

Epoch 20/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0290

Epoch 00020: loss improved from 0.02943 to 0.02901, saving model to ./model_pca_ae.hdf5

Epoch 21/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0276

Epoch 00021: loss improved from 0.02901 to 0.02757, saving model to ./model_pca_ae.hdf5

Epoch 22/1000

16/16 [==============================] - 0s 28ms/step - loss: 0.0278

Epoch 00022: loss did not improve from 0.02757

Epoch 23/1000

16/16 [==============================] - 0s 28ms/step - loss: 0.0265

Epoch 00023: loss improved from 0.02757 to 0.02654, saving model to ./model_pca_ae.hdf5

Epoch 24/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0259

Epoch 00024: loss improved from 0.02654 to 0.02591, saving model to ./model_pca_ae.hdf5

Epoch 25/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0255

Epoch 00025: loss improved from 0.02591 to 0.02547, saving model to ./model_pca_ae.hdf5

Epoch 26/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0259

Epoch 00026: loss did not improve from 0.02547

Epoch 27/1000

16/16 [==============================] - 0s 28ms/step - loss: 0.0244

Epoch 00027: loss improved from 0.02547 to 0.02436, saving model to ./model_pca_ae.hdf5

Epoch 28/1000

16/16 [==============================] - 0s 28ms/step - loss: 0.0235

Epoch 00028: loss improved from 0.02436 to 0.02351, saving model to ./model_pca_ae.hdf5

Epoch 29/1000

16/16 [==============================] - 0s 28ms/step - loss: 0.0222

Epoch 00029: loss improved from 0.02351 to 0.02222, saving model to ./model_pca_ae.hdf5

Epoch 30/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0217

Epoch 00030: loss improved from 0.02222 to 0.02170, saving model to ./model_pca_ae.hdf5

Epoch 31/1000

16/16 [==============================] - 0s 28ms/step - loss: 0.0213

Epoch 00031: loss improved from 0.02170 to 0.02127, saving model to ./model_pca_ae.hdf5

Epoch 32/1000

16/16 [==============================] - 0s 28ms/step - loss: 0.0205

Epoch 00032: loss improved from 0.02127 to 0.02055, saving model to ./model_pca_ae.hdf5

Epoch 33/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0194

Epoch 00033: loss improved from 0.02055 to 0.01944, saving model to ./model_pca_ae.hdf5

Epoch 34/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0198

Epoch 00034: loss did not improve from 0.01944

Epoch 35/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0184

Epoch 00035: loss improved from 0.01944 to 0.01840, saving model to ./model_pca_ae.hdf5

Epoch 36/1000

16/16 [==============================] - 0s 28ms/step - loss: 0.0181

Epoch 00036: loss improved from 0.01840 to 0.01807, saving model to ./model_pca_ae.hdf5

Epoch 37/1000

16/16 [==============================] - 0s 28ms/step - loss: 0.0187

Epoch 00037: loss did not improve from 0.01807

Epoch 38/1000

16/16 [==============================] - 0s 28ms/step - loss: 0.0174

Epoch 00038: loss improved from 0.01807 to 0.01741, saving model to ./model_pca_ae.hdf5

Epoch 39/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0181

Epoch 00039: loss did not improve from 0.01741

Epoch 40/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0183

Epoch 00040: loss did not improve from 0.01741

Epoch 41/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0163

Epoch 00041: loss improved from 0.01741 to 0.01625, saving model to ./model_pca_ae.hdf5

Epoch 42/1000

16/16 [==============================] - 0s 28ms/step - loss: 0.0169

Epoch 00042: loss did not improve from 0.01625

Epoch 43/1000

16/16 [==============================] - 0s 28ms/step - loss: 0.0158

Epoch 00043: loss improved from 0.01625 to 0.01575, saving model to ./model_pca_ae.hdf5

Epoch 44/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0156

Epoch 00044: loss improved from 0.01575 to 0.01561, saving model to ./model_pca_ae.hdf5

Epoch 45/1000

16/16 [==============================] - 0s 28ms/step - loss: 0.0159

Epoch 00045: loss did not improve from 0.01561

Epoch 46/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0172

Epoch 00046: loss did not improve from 0.01561

Epoch 47/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0157

Epoch 00047: loss did not improve from 0.01561

Epoch 48/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0162

Epoch 00048: loss did not improve from 0.01561

Epoch 49/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0158

Epoch 00049: loss did not improve from 0.01561

Epoch 50/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0156

Epoch 00050: loss improved from 0.01561 to 0.01559, saving model to ./model_pca_ae.hdf5

Epoch 51/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0158

Epoch 00051: loss did not improve from 0.01559

Epoch 52/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0146

Epoch 00052: loss improved from 0.01559 to 0.01464, saving model to ./model_pca_ae.hdf5

Epoch 53/1000

16/16 [==============================] - 0s 28ms/step - loss: 0.0155

Epoch 00053: loss did not improve from 0.01464

Epoch 54/1000

16/16 [==============================] - 0s 28ms/step - loss: 0.0152

Epoch 00054: loss did not improve from 0.01464

Epoch 55/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0153

Epoch 00055: loss did not improve from 0.01464

Epoch 56/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0168

Epoch 00056: loss did not improve from 0.01464

Epoch 57/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0149

Epoch 00057: loss did not improve from 0.01464

Epoch 58/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0145

Epoch 00058: loss improved from 0.01464 to 0.01452, saving model to ./model_pca_ae.hdf5

Epoch 59/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0139

Epoch 00059: loss improved from 0.01452 to 0.01391, saving model to ./model_pca_ae.hdf5

Epoch 60/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0152

Epoch 00060: loss did not improve from 0.01391

Epoch 61/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0152

Epoch 00061: loss did not improve from 0.01391

Epoch 62/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0138

Epoch 00062: loss improved from 0.01391 to 0.01379, saving model to ./model_pca_ae.hdf5

Epoch 63/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0142

Epoch 00063: loss did not improve from 0.01379

Epoch 64/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0142

Epoch 00064: loss did not improve from 0.01379

Epoch 65/1000

16/16 [==============================] - 0s 28ms/step - loss: 0.0139

Epoch 00065: loss did not improve from 0.01379

Epoch 66/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0143

Epoch 00066: loss did not improve from 0.01379

Epoch 67/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0134

Epoch 00067: loss improved from 0.01379 to 0.01341, saving model to ./model_pca_ae.hdf5

Epoch 68/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0141

Epoch 00068: loss did not improve from 0.01341

Epoch 69/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0151

Epoch 00069: loss did not improve from 0.01341

Epoch 70/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0138

Epoch 00070: loss did not improve from 0.01341

Epoch 71/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0143

Epoch 00071: loss did not improve from 0.01341

Epoch 72/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0136

Epoch 00072: loss did not improve from 0.01341

Epoch 73/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0131

Epoch 00073: loss improved from 0.01341 to 0.01310, saving model to ./model_pca_ae.hdf5

Epoch 74/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0142

Epoch 00074: loss did not improve from 0.01310

Epoch 75/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0134

Epoch 00075: loss did not improve from 0.01310

Epoch 76/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0138

Epoch 00076: loss did not improve from 0.01310

Epoch 77/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0145

Epoch 00077: loss did not improve from 0.01310

Epoch 78/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0132

Epoch 00078: loss did not improve from 0.01310

Epoch 79/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0127

Epoch 00079: loss improved from 0.01310 to 0.01267, saving model to ./model_pca_ae.hdf5

Epoch 80/1000

16/16 [==============================] - 0s 28ms/step - loss: 0.0140

Epoch 00080: loss did not improve from 0.01267

Epoch 81/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0142

Epoch 00081: loss did not improve from 0.01267

Epoch 82/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0140

Epoch 00082: loss did not improve from 0.01267

Epoch 83/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0141

Epoch 00083: loss did not improve from 0.01267

Epoch 84/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0136

Epoch 00084: loss did not improve from 0.01267

Epoch 85/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0129

Epoch 00085: loss did not improve from 0.01267

Epoch 86/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0130

Epoch 00086: loss did not improve from 0.01267

Epoch 87/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0119

Epoch 00087: loss improved from 0.01267 to 0.01190, saving model to ./model_pca_ae.hdf5

Epoch 88/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0127

Epoch 00088: loss did not improve from 0.01190

Epoch 89/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0125

Epoch 00089: loss did not improve from 0.01190

Epoch 90/1000

16/16 [==============================] - 0s 28ms/step - loss: 0.0120

Epoch 00090: loss did not improve from 0.01190

Epoch 91/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0126

Epoch 00091: loss did not improve from 0.01190

Epoch 92/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0119

Epoch 00092: loss did not improve from 0.01190

Epoch 93/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0149

Epoch 00093: loss did not improve from 0.01190

Epoch 94/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0130

Epoch 00094: loss did not improve from 0.01190

Epoch 95/1000

16/16 [==============================] - 0s 28ms/step - loss: 0.0127

Epoch 00095: loss did not improve from 0.01190

Epoch 96/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0130

Epoch 00096: loss did not improve from 0.01190

Epoch 97/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0123

Epoch 00097: loss did not improve from 0.01190

Epoch 98/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0125

Epoch 00098: loss did not improve from 0.01190

Epoch 99/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0123

Epoch 00099: loss did not improve from 0.01190

Epoch 100/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0139

Epoch 00100: loss did not improve from 0.01190

Epoch 101/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0136

Epoch 00101: loss did not improve from 0.01190

Epoch 102/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0122

Epoch 00102: loss did not improve from 0.01190

Epoch 103/1000

16/16 [==============================] - 0s 28ms/step - loss: 0.0121

Epoch 00103: loss did not improve from 0.01190

Epoch 104/1000

16/16 [==============================] - 0s 28ms/step - loss: 0.0120

Epoch 00104: loss did not improve from 0.01190

Epoch 105/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0122

Epoch 00105: loss did not improve from 0.01190

Epoch 106/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0120

Epoch 00106: loss did not improve from 0.01190

Epoch 107/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0121

Epoch 00107: loss did not improve from 0.01190

Epoch 108/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0114

Epoch 00108: loss improved from 0.01190 to 0.01136, saving model to ./model_pca_ae.hdf5

Epoch 109/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0115

Epoch 00109: loss did not improve from 0.01136

Epoch 110/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0113

Epoch 00110: loss improved from 0.01136 to 0.01126, saving model to ./model_pca_ae.hdf5

Epoch 111/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0114

Epoch 00111: loss did not improve from 0.01126

Epoch 112/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0140

Epoch 00112: loss did not improve from 0.01126

Epoch 113/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0125

Epoch 00113: loss did not improve from 0.01126

Epoch 114/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0128

Epoch 00114: loss did not improve from 0.01126

Epoch 115/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0125

Epoch 00115: loss did not improve from 0.01126

Epoch 116/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0121

Epoch 00116: loss did not improve from 0.01126

Epoch 117/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0121

Epoch 00117: loss did not improve from 0.01126

Epoch 118/1000

16/16 [==============================] - 0s 28ms/step - loss: 0.0123

Epoch 00118: loss did not improve from 0.01126

Epoch 119/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0118

Epoch 00119: loss did not improve from 0.01126

Epoch 120/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0112

Epoch 00120: loss improved from 0.01126 to 0.01125, saving model to ./model_pca_ae.hdf5

Epoch 121/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0121

Epoch 00121: loss did not improve from 0.01125

Epoch 122/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0123

Epoch 00122: loss did not improve from 0.01125

Epoch 123/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0133

Epoch 00123: loss did not improve from 0.01125

Epoch 124/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0114

Epoch 00124: loss did not improve from 0.01125

Epoch 125/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0111

Epoch 00125: loss improved from 0.01125 to 0.01114, saving model to ./model_pca_ae.hdf5

Epoch 126/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0110

Epoch 00126: loss improved from 0.01114 to 0.01102, saving model to ./model_pca_ae.hdf5

Epoch 127/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0129

Epoch 00127: loss did not improve from 0.01102

Epoch 128/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0126

Epoch 00128: loss did not improve from 0.01102

Epoch 129/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0120

Epoch 00129: loss did not improve from 0.01102

Epoch 130/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0118

Epoch 00130: loss did not improve from 0.01102

Epoch 131/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0111

Epoch 00131: loss did not improve from 0.01102

Epoch 132/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0115

Epoch 00132: loss did not improve from 0.01102

Epoch 133/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0116

Epoch 00133: loss did not improve from 0.01102

Epoch 134/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0118

Epoch 00134: loss did not improve from 0.01102

Epoch 135/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0110

Epoch 00135: loss improved from 0.01102 to 0.01098, saving model to ./model_pca_ae.hdf5

Epoch 136/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0106

Epoch 00136: loss improved from 0.01098 to 0.01063, saving model to ./model_pca_ae.hdf5

Epoch 137/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0109

Epoch 00137: loss did not improve from 0.01063

Epoch 138/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0120

Epoch 00138: loss did not improve from 0.01063

Epoch 139/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0127

Epoch 00139: loss did not improve from 0.01063

Epoch 140/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0116

Epoch 00140: loss did not improve from 0.01063

Epoch 141/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0118

Epoch 00141: loss did not improve from 0.01063

Epoch 142/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0109

Epoch 00142: loss did not improve from 0.01063

Epoch 143/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0109

Epoch 00143: loss did not improve from 0.01063

Epoch 144/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0114

Epoch 00144: loss did not improve from 0.01063

Epoch 145/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0118

Epoch 00145: loss did not improve from 0.01063

Epoch 146/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0113

Epoch 00146: loss did not improve from 0.01063

Epoch 147/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0116

Epoch 00147: loss did not improve from 0.01063

Epoch 148/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0121

Epoch 00148: loss did not improve from 0.01063

Epoch 149/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0111

Epoch 00149: loss did not improve from 0.01063

Epoch 150/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0116

Epoch 00150: loss did not improve from 0.01063

Epoch 151/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0107

Epoch 00151: loss did not improve from 0.01063

Epoch 152/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0121

Epoch 00152: loss did not improve from 0.01063

Epoch 153/1000

16/16 [==============================] - 0s 31ms/step - loss: 0.0117

Epoch 00153: loss did not improve from 0.01063

Epoch 154/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0117

Epoch 00154: loss did not improve from 0.01063

Epoch 155/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0110

Epoch 00155: loss did not improve from 0.01063

Epoch 156/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0102

Epoch 00156: loss improved from 0.01063 to 0.01019, saving model to ./model_pca_ae.hdf5

Epoch 157/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0106

Epoch 00157: loss did not improve from 0.01019

Epoch 158/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0107

Epoch 00158: loss did not improve from 0.01019

Epoch 159/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0116

Epoch 00159: loss did not improve from 0.01019

Epoch 160/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0124

Epoch 00160: loss did not improve from 0.01019

Epoch 161/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0122

Epoch 00161: loss did not improve from 0.01019

Epoch 162/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0110

Epoch 00162: loss did not improve from 0.01019

Epoch 163/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0119

Epoch 00163: loss did not improve from 0.01019

Epoch 164/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0106

Epoch 00164: loss did not improve from 0.01019

Epoch 165/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0106

Epoch 00165: loss did not improve from 0.01019

Epoch 166/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0117

Epoch 00166: loss did not improve from 0.01019

Epoch 167/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0107

Epoch 00167: loss did not improve from 0.01019

Epoch 168/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0112

Epoch 00168: loss did not improve from 0.01019

Epoch 169/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0106

Epoch 00169: loss did not improve from 0.01019

Epoch 170/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0104

Epoch 00170: loss did not improve from 0.01019

Epoch 171/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0112

Epoch 00171: loss did not improve from 0.01019

Epoch 172/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0105

Epoch 00172: loss did not improve from 0.01019

Epoch 173/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0107

Epoch 00173: loss did not improve from 0.01019

Epoch 174/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0110

Epoch 00174: loss did not improve from 0.01019

Epoch 175/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0109

Epoch 00175: loss did not improve from 0.01019

Epoch 176/1000

16/16 [==============================] - 0s 28ms/step - loss: 0.0101

Epoch 00176: loss improved from 0.01019 to 0.01013, saving model to ./model_pca_ae.hdf5

Epoch 177/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0112

Epoch 00177: loss did not improve from 0.01013

Epoch 178/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0109

Epoch 00178: loss did not improve from 0.01013

Epoch 179/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0117

Epoch 00179: loss did not improve from 0.01013

Epoch 180/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0113

Epoch 00180: loss did not improve from 0.01013

Epoch 181/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0107

Epoch 00181: loss did not improve from 0.01013

Epoch 00181: ReduceLROnPlateau reducing learning rate to 0.0010000000474974513.

Epoch 182/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0103

Epoch 00182: loss did not improve from 0.01013

Epoch 183/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0111

Epoch 00183: loss did not improve from 0.01013

Epoch 184/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0103

Epoch 00184: loss did not improve from 0.01013

Epoch 185/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0099

Epoch 00185: loss improved from 0.01013 to 0.00986, saving model to ./model_pca_ae.hdf5

Epoch 186/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0102

Epoch 00186: loss did not improve from 0.00986

Epoch 187/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0099

Epoch 00187: loss did not improve from 0.00986

Epoch 188/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0103

Epoch 00188: loss did not improve from 0.00986

Epoch 189/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0104

Epoch 00189: loss did not improve from 0.00986

Epoch 190/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0100

Epoch 00190: loss did not improve from 0.00986

Epoch 191/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0103

Epoch 00191: loss did not improve from 0.00986

Epoch 192/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0099

Epoch 00192: loss did not improve from 0.00986

Epoch 193/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0101

Epoch 00193: loss did not improve from 0.00986

Epoch 194/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0100

Epoch 00194: loss did not improve from 0.00986

Epoch 195/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0103

Epoch 00195: loss did not improve from 0.00986

Epoch 196/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0104

Epoch 00196: loss did not improve from 0.00986

Epoch 197/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0102

Epoch 00197: loss did not improve from 0.00986

Epoch 198/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0100

Epoch 00198: loss did not improve from 0.00986

Epoch 199/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0104

Epoch 00199: loss did not improve from 0.00986

Epoch 200/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0112

Epoch 00200: loss did not improve from 0.00986

Epoch 201/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0102

Epoch 00201: loss did not improve from 0.00986

Epoch 202/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0096

Epoch 00202: loss improved from 0.00986 to 0.00965, saving model to ./model_pca_ae.hdf5

Epoch 203/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0106

Epoch 00203: loss did not improve from 0.00965

Epoch 204/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0111

Epoch 00204: loss did not improve from 0.00965

Epoch 205/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0105

Epoch 00205: loss did not improve from 0.00965

Epoch 206/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0103

Epoch 00206: loss did not improve from 0.00965

Epoch 207/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0092

Epoch 00207: loss improved from 0.00965 to 0.00923, saving model to ./model_pca_ae.hdf5

Epoch 208/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0101

Epoch 00208: loss did not improve from 0.00923

Epoch 209/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0107

Epoch 00209: loss did not improve from 0.00923

Epoch 210/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0111

Epoch 00210: loss did not improve from 0.00923

Epoch 211/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0104

Epoch 00211: loss did not improve from 0.00923

Epoch 212/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0103

Epoch 00212: loss did not improve from 0.00923

Epoch 213/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0099

Epoch 00213: loss did not improve from 0.00923

Epoch 214/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0096

Epoch 00214: loss did not improve from 0.00923

Epoch 215/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0098

Epoch 00215: loss did not improve from 0.00923

Epoch 216/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0097

Epoch 00216: loss did not improve from 0.00923

Epoch 217/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0100

Epoch 00217: loss did not improve from 0.00923

Epoch 218/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0104

Epoch 00218: loss did not improve from 0.00923

Epoch 219/1000

16/16 [==============================] - 0s 31ms/step - loss: 0.0099

Epoch 00219: loss did not improve from 0.00923

Epoch 220/1000

16/16 [==============================] - 0s 31ms/step - loss: 0.0099

Epoch 00220: loss did not improve from 0.00923

Epoch 221/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0105

Epoch 00221: loss did not improve from 0.00923

Epoch 222/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0098

Epoch 00222: loss did not improve from 0.00923

Epoch 223/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0104

Epoch 00223: loss did not improve from 0.00923

Epoch 224/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0104

Epoch 00224: loss did not improve from 0.00923

Epoch 225/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0105

Epoch 00225: loss did not improve from 0.00923

Epoch 226/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0096

Epoch 00226: loss did not improve from 0.00923

Epoch 227/1000

16/16 [==============================] - 0s 31ms/step - loss: 0.0097

Epoch 00227: loss did not improve from 0.00923

Epoch 228/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0098

Epoch 00228: loss did not improve from 0.00923

Epoch 229/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0097

Epoch 00229: loss did not improve from 0.00923

Epoch 230/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0095

Epoch 00230: loss did not improve from 0.00923

Epoch 231/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0094

Epoch 00231: loss did not improve from 0.00923

Epoch 232/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0095

Epoch 00232: loss did not improve from 0.00923

Epoch 00232: ReduceLROnPlateau reducing learning rate to 0.0005000000237487257.

Epoch 233/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0092

Epoch 00233: loss improved from 0.00923 to 0.00916, saving model to ./model_pca_ae.hdf5

Epoch 234/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0100

Epoch 00234: loss did not improve from 0.00916

Epoch 235/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0097

Epoch 00235: loss did not improve from 0.00916

Epoch 236/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0095

Epoch 00236: loss did not improve from 0.00916

Epoch 237/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0096

Epoch 00237: loss did not improve from 0.00916

Epoch 238/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0092

Epoch 00238: loss did not improve from 0.00916

Epoch 239/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0101

Epoch 00239: loss did not improve from 0.00916

Epoch 240/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0096

Epoch 00240: loss did not improve from 0.00916

Epoch 241/1000

16/16 [==============================] - 0s 31ms/step - loss: 0.0094

Epoch 00241: loss did not improve from 0.00916

Epoch 242/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0100

Epoch 00242: loss did not improve from 0.00916

Epoch 243/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0097

Epoch 00243: loss did not improve from 0.00916

Epoch 244/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0092

Epoch 00244: loss did not improve from 0.00916

Epoch 245/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0096

Epoch 00245: loss did not improve from 0.00916

Epoch 246/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0094

Epoch 00246: loss did not improve from 0.00916

Epoch 247/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0096

Epoch 00247: loss did not improve from 0.00916

Epoch 248/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0095

Epoch 00248: loss did not improve from 0.00916

Epoch 249/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0093

Epoch 00249: loss did not improve from 0.00916

Epoch 250/1000

16/16 [==============================] - 0s 31ms/step - loss: 0.0096

Epoch 00250: loss did not improve from 0.00916

Epoch 251/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0103

Epoch 00251: loss did not improve from 0.00916

Epoch 252/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0097

Epoch 00252: loss did not improve from 0.00916

Epoch 253/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0094

Epoch 00253: loss did not improve from 0.00916

Epoch 254/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0091

Epoch 00254: loss improved from 0.00916 to 0.00907, saving model to ./model_pca_ae.hdf5

Epoch 255/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0098

Epoch 00255: loss did not improve from 0.00907

Epoch 256/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0102

Epoch 00256: loss did not improve from 0.00907

Epoch 257/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0093

Epoch 00257: loss did not improve from 0.00907

Epoch 258/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0108

Epoch 00258: loss did not improve from 0.00907

Epoch 259/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0097

Epoch 00259: loss did not improve from 0.00907

Epoch 260/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0090

Epoch 00260: loss improved from 0.00907 to 0.00901, saving model to ./model_pca_ae.hdf5

Epoch 261/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0093

Epoch 00261: loss did not improve from 0.00901

Epoch 262/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0091

Epoch 00262: loss did not improve from 0.00901

Epoch 263/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0097

Epoch 00263: loss did not improve from 0.00901

Epoch 264/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0095

Epoch 00264: loss did not improve from 0.00901

Epoch 265/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0091

Epoch 00265: loss did not improve from 0.00901

Epoch 266/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0093

Epoch 00266: loss did not improve from 0.00901

Epoch 267/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0094

Epoch 00267: loss did not improve from 0.00901

Epoch 268/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0096

Epoch 00268: loss did not improve from 0.00901

Epoch 269/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0095

Epoch 00269: loss did not improve from 0.00901

Epoch 270/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0092

Epoch 00270: loss did not improve from 0.00901

Epoch 271/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0093

Epoch 00271: loss did not improve from 0.00901

Epoch 272/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0095

Epoch 00272: loss did not improve from 0.00901

Epoch 273/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0101

Epoch 00273: loss did not improve from 0.00901

Epoch 274/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0095

Epoch 00274: loss did not improve from 0.00901

Epoch 275/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0090

Epoch 00275: loss improved from 0.00901 to 0.00899, saving model to ./model_pca_ae.hdf5

Epoch 276/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0094

Epoch 00276: loss did not improve from 0.00899

Epoch 277/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0101

Epoch 00277: loss did not improve from 0.00899

Epoch 278/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0102

Epoch 00278: loss did not improve from 0.00899

Epoch 279/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0094

Epoch 00279: loss did not improve from 0.00899

Epoch 00279: ReduceLROnPlateau reducing learning rate to 0.0002500000118743628.

Epoch 280/1000

16/16 [==============================] - 0s 31ms/step - loss: 0.0092

Epoch 00280: loss did not improve from 0.00899

Epoch 281/1000

16/16 [==============================] - 0s 31ms/step - loss: 0.0098

Epoch 00281: loss did not improve from 0.00899

Epoch 282/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0093

Epoch 00282: loss did not improve from 0.00899

Epoch 283/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0098

Epoch 00283: loss did not improve from 0.00899

Epoch 284/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0091

Epoch 00284: loss did not improve from 0.00899

Epoch 285/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0093

Epoch 00285: loss did not improve from 0.00899

Epoch 286/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0093

Epoch 00286: loss did not improve from 0.00899

Epoch 287/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0092

Epoch 00287: loss did not improve from 0.00899

Epoch 288/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0094

Epoch 00288: loss did not improve from 0.00899

Epoch 289/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0092

Epoch 00289: loss did not improve from 0.00899

Epoch 290/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0091

Epoch 00290: loss did not improve from 0.00899

Epoch 291/1000

16/16 [==============================] - 0s 31ms/step - loss: 0.0093

Epoch 00291: loss did not improve from 0.00899

Epoch 292/1000

16/16 [==============================] - 0s 31ms/step - loss: 0.0090

Epoch 00292: loss did not improve from 0.00899

Epoch 293/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0094

Epoch 00293: loss did not improve from 0.00899

Epoch 294/1000

16/16 [==============================] - 0s 31ms/step - loss: 0.0094

Epoch 00294: loss did not improve from 0.00899

Epoch 295/1000

16/16 [==============================] - 0s 31ms/step - loss: 0.0096

Epoch 00295: loss did not improve from 0.00899

Epoch 296/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0088

Epoch 00296: loss improved from 0.00899 to 0.00877, saving model to ./model_pca_ae.hdf5

Epoch 297/1000

16/16 [==============================] - 0s 31ms/step - loss: 0.0092

Epoch 00297: loss did not improve from 0.00877

Epoch 298/1000

16/16 [==============================] - 0s 31ms/step - loss: 0.0093

Epoch 00298: loss did not improve from 0.00877

Epoch 299/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0095

Epoch 00299: loss did not improve from 0.00877

Epoch 300/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0099

Epoch 00300: loss did not improve from 0.00877

Epoch 301/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0090

Epoch 00301: loss did not improve from 0.00877

Epoch 302/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0093

Epoch 00302: loss did not improve from 0.00877

Epoch 303/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0095

Epoch 00303: loss did not improve from 0.00877

Epoch 304/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0103

Epoch 00304: loss did not improve from 0.00877

Epoch 305/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0091

Epoch 00305: loss did not improve from 0.00877

Epoch 306/1000

16/16 [==============================] - 0s 31ms/step - loss: 0.0092

Epoch 00306: loss did not improve from 0.00877

Epoch 307/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0090

Epoch 00307: loss did not improve from 0.00877

Epoch 308/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0093

Epoch 00308: loss did not improve from 0.00877

Epoch 309/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0097

Epoch 00309: loss did not improve from 0.00877

Epoch 310/1000

16/16 [==============================] - 0s 31ms/step - loss: 0.0091

Epoch 00310: loss did not improve from 0.00877

Epoch 311/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0089

Epoch 00311: loss did not improve from 0.00877

Epoch 312/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0094

Epoch 00312: loss did not improve from 0.00877

Epoch 313/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0090

Epoch 00313: loss did not improve from 0.00877

Epoch 314/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0092

Epoch 00314: loss did not improve from 0.00877

Epoch 315/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0092

Epoch 00315: loss did not improve from 0.00877

Epoch 316/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0093

Epoch 00316: loss did not improve from 0.00877

Epoch 317/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0100

Epoch 00317: loss did not improve from 0.00877

Epoch 318/1000

16/16 [==============================] - 0s 31ms/step - loss: 0.0091

Epoch 00318: loss did not improve from 0.00877

Epoch 319/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0094

Epoch 00319: loss did not improve from 0.00877

Epoch 320/1000

16/16 [==============================] - 0s 31ms/step - loss: 0.0091

Epoch 00320: loss did not improve from 0.00877

Epoch 321/1000

16/16 [==============================] - 0s 31ms/step - loss: 0.0093

Epoch 00321: loss did not improve from 0.00877

Epoch 00321: ReduceLROnPlateau reducing learning rate to 0.0001250000059371814.

Epoch 322/1000

16/16 [==============================] - 0s 31ms/step - loss: 0.0091

Epoch 00322: loss did not improve from 0.00877

Epoch 323/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0093

Epoch 00323: loss did not improve from 0.00877

Epoch 324/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0093

Epoch 00324: loss did not improve from 0.00877

Epoch 325/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0090

Epoch 00325: loss did not improve from 0.00877

Epoch 326/1000

16/16 [==============================] - 0s 31ms/step - loss: 0.0094

Epoch 00326: loss did not improve from 0.00877

Epoch 327/1000

16/16 [==============================] - 0s 31ms/step - loss: 0.0098

Epoch 00327: loss did not improve from 0.00877

Epoch 328/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0090

Epoch 00328: loss did not improve from 0.00877

Epoch 329/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0097

Epoch 00329: loss did not improve from 0.00877

Epoch 330/1000

16/16 [==============================] - 0s 31ms/step - loss: 0.0099

Epoch 00330: loss did not improve from 0.00877

Epoch 331/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0086

Epoch 00331: loss improved from 0.00877 to 0.00862, saving model to ./model_pca_ae.hdf5

Epoch 332/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0094

Epoch 00332: loss did not improve from 0.00862

Epoch 333/1000

16/16 [==============================] - 0s 31ms/step - loss: 0.0086

Epoch 00333: loss improved from 0.00862 to 0.00861, saving model to ./model_pca_ae.hdf5

Epoch 334/1000

16/16 [==============================] - 0s 31ms/step - loss: 0.0090

Epoch 00334: loss did not improve from 0.00861

Epoch 335/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0096

Epoch 00335: loss did not improve from 0.00861

Epoch 336/1000

16/16 [==============================] - 0s 31ms/step - loss: 0.0090

Epoch 00336: loss did not improve from 0.00861

Epoch 337/1000

16/16 [==============================] - 0s 31ms/step - loss: 0.0092

Epoch 00337: loss did not improve from 0.00861

Epoch 338/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0093

Epoch 00338: loss did not improve from 0.00861

Epoch 339/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0093

Epoch 00339: loss did not improve from 0.00861

Epoch 340/1000

16/16 [==============================] - 0s 31ms/step - loss: 0.0092

Epoch 00340: loss did not improve from 0.00861

Epoch 341/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0087

Epoch 00341: loss did not improve from 0.00861

Epoch 342/1000

16/16 [==============================] - 0s 31ms/step - loss: 0.0087

Epoch 00342: loss did not improve from 0.00861

Epoch 343/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0092

Epoch 00343: loss did not improve from 0.00861

Epoch 344/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0095

Epoch 00344: loss did not improve from 0.00861

Epoch 345/1000

16/16 [==============================] - 0s 31ms/step - loss: 0.0088

Epoch 00345: loss did not improve from 0.00861

Epoch 346/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0089

Epoch 00346: loss did not improve from 0.00861

Epoch 347/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0090

Epoch 00347: loss did not improve from 0.00861

Epoch 348/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0090

Epoch 00348: loss did not improve from 0.00861

Epoch 349/1000

16/16 [==============================] - 0s 31ms/step - loss: 0.0089

Epoch 00349: loss did not improve from 0.00861

Epoch 350/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0091

Epoch 00350: loss did not improve from 0.00861

Epoch 351/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0093

Epoch 00351: loss did not improve from 0.00861

Epoch 352/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0095

Epoch 00352: loss did not improve from 0.00861

Epoch 353/1000

16/16 [==============================] - 0s 31ms/step - loss: 0.0088

Epoch 00353: loss did not improve from 0.00861

Epoch 354/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0086

Epoch 00354: loss improved from 0.00861 to 0.00857, saving model to ./model_pca_ae.hdf5

Epoch 355/1000

16/16 [==============================] - 0s 31ms/step - loss: 0.0088

Epoch 00355: loss did not improve from 0.00857

Epoch 356/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0090

Epoch 00356: loss did not improve from 0.00857

Epoch 00356: ReduceLROnPlateau reducing learning rate to 6.25000029685907e-05.

Epoch 357/1000

16/16 [==============================] - 0s 31ms/step - loss: 0.0093

Epoch 00357: loss did not improve from 0.00857

Epoch 358/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0091

Epoch 00358: loss did not improve from 0.00857

Epoch 359/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0090

Epoch 00359: loss did not improve from 0.00857

Epoch 360/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0090

Epoch 00360: loss did not improve from 0.00857

Epoch 361/1000

16/16 [==============================] - 0s 31ms/step - loss: 0.0088

Epoch 00361: loss did not improve from 0.00857

Epoch 362/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0090

Epoch 00362: loss did not improve from 0.00857

Epoch 363/1000

16/16 [==============================] - 0s 31ms/step - loss: 0.0091

Epoch 00363: loss did not improve from 0.00857

Epoch 364/1000

16/16 [==============================] - 1s 32ms/step - loss: 0.0099

Epoch 00364: loss did not improve from 0.00857

Epoch 365/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0088

Epoch 00365: loss did not improve from 0.00857

Epoch 366/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0092

Epoch 00366: loss did not improve from 0.00857

Epoch 367/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0092

Epoch 00367: loss did not improve from 0.00857

Epoch 368/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0093

Epoch 00368: loss did not improve from 0.00857

Epoch 369/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0092

Epoch 00369: loss did not improve from 0.00857

Epoch 370/1000

16/16 [==============================] - 0s 31ms/step - loss: 0.0089

Epoch 00370: loss did not improve from 0.00857

Epoch 371/1000

16/16 [==============================] - 0s 31ms/step - loss: 0.0091

Epoch 00371: loss did not improve from 0.00857

Epoch 372/1000

16/16 [==============================] - 0s 31ms/step - loss: 0.0091

Epoch 00372: loss did not improve from 0.00857

Epoch 373/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0095

Epoch 00373: loss did not improve from 0.00857

Epoch 374/1000

16/16 [==============================] - 0s 31ms/step - loss: 0.0089

Epoch 00374: loss did not improve from 0.00857

Epoch 375/1000

16/16 [==============================] - 0s 31ms/step - loss: 0.0089

Epoch 00375: loss did not improve from 0.00857

Epoch 376/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0089

Epoch 00376: loss did not improve from 0.00857

Epoch 377/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0091

Epoch 00377: loss did not improve from 0.00857

Epoch 378/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0095

Epoch 00378: loss did not improve from 0.00857

Epoch 379/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0090

Epoch 00379: loss did not improve from 0.00857

Epoch 380/1000

16/16 [==============================] - 0s 31ms/step - loss: 0.0092

Epoch 00380: loss did not improve from 0.00857

Epoch 381/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0091

Epoch 00381: loss did not improve from 0.00857

Epoch 00381: ReduceLROnPlateau reducing learning rate to 3.125000148429535e-05.

Epoch 382/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0092

Epoch 00382: loss did not improve from 0.00857

Epoch 383/1000

16/16 [==============================] - 0s 31ms/step - loss: 0.0088

Epoch 00383: loss did not improve from 0.00857

Epoch 384/1000

16/16 [==============================] - 1s 31ms/step - loss: 0.0090

Epoch 00384: loss did not improve from 0.00857

Epoch 385/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0088

Epoch 00385: loss did not improve from 0.00857

Epoch 386/1000

16/16 [==============================] - 0s 31ms/step - loss: 0.0091

Epoch 00386: loss did not improve from 0.00857

Epoch 387/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0092

Epoch 00387: loss did not improve from 0.00857

Epoch 388/1000

16/16 [==============================] - 0s 31ms/step - loss: 0.0091

Epoch 00388: loss did not improve from 0.00857

Epoch 389/1000

16/16 [==============================] - 0s 31ms/step - loss: 0.0091

Epoch 00389: loss did not improve from 0.00857

Epoch 390/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0089

Epoch 00390: loss did not improve from 0.00857

Epoch 391/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0093

Epoch 00391: loss did not improve from 0.00857

Epoch 392/1000

16/16 [==============================] - 0s 31ms/step - loss: 0.0091

Epoch 00392: loss did not improve from 0.00857

Epoch 393/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0091

Epoch 00393: loss did not improve from 0.00857

Epoch 394/1000

16/16 [==============================] - 0s 31ms/step - loss: 0.0092

Epoch 00394: loss did not improve from 0.00857

Epoch 395/1000

16/16 [==============================] - 0s 29ms/step - loss: 0.0093

Epoch 00395: loss did not improve from 0.00857

Epoch 396/1000

16/16 [==============================] - 0s 31ms/step - loss: 0.0086

Epoch 00396: loss did not improve from 0.00857

Epoch 397/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0089

Epoch 00397: loss did not improve from 0.00857

Epoch 398/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0090

Epoch 00398: loss did not improve from 0.00857

Epoch 399/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0092

Epoch 00399: loss did not improve from 0.00857

Epoch 400/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0087

Epoch 00400: loss did not improve from 0.00857

Epoch 401/1000

16/16 [==============================] - 0s 31ms/step - loss: 0.0088

Epoch 00401: loss did not improve from 0.00857

Epoch 402/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0088

Epoch 00402: loss did not improve from 0.00857

Epoch 403/1000

16/16 [==============================] - 0s 30ms/step - loss: 0.0090

Epoch 00403: loss did not improve from 0.00857

Epoch 404/1000

16/16 [==============================] - 0s 31ms/step - loss: 0.0091

Epoch 00404: loss did not improve from 0.00857

Epoch 00404: early stopping

Examine the results

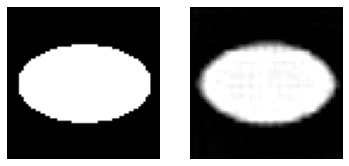

Let’s load the best model and test it on a frame first.

```python
pca_ae_model = keras.models.load_model('model_pca_ae.hdf5', custom_objects={"LatentCovarianceLayer": LatentCovarianceLayer})

img = dataset[1430, ...]
img_rec = pca_ae_model.predict(img[np.newaxis, ...])

_, ax = plt.subplots(1, 2)
ax[0].imshow(img[..., 0], cmap=plt.get_cmap('gray'), vmin=0, vmax=1)
ax[0].axis('off')
ax[1].imshow(img_rec[0, ..., 0], cmap=plt.get_cmap('gray'), vmin=0, vmax=1)
ax[1].axis('off')
plt.show()
```

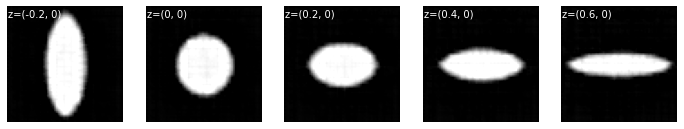
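Besides the visual comparison, a number makes reconstruction quality easier to track across models. The sketch below reports the mean squared error and, since the phantoms are binary, the fraction of pixels that are wrong after thresholding the reconstruction back to {0, 1}. The helper name `reconstruction_errors` and the 0.5 threshold are my own assumptions, not something taken from the paper.

```python
import numpy as np


def reconstruction_errors(img, img_rec, threshold=0.5):
    """Compare a binary image with its continuous-valued reconstruction.

    Returns (mse, pixel_error): the mean squared error, and the fraction of
    pixels that differ after thresholding the reconstruction to {0, 1}.
    The threshold of 0.5 is an assumption for binary phantoms.
    """
    # Drop singleton axes so (64, 64, 1) and (1, 64, 64, 1) both become (64, 64)
    img = np.asarray(img, dtype=float).squeeze()
    img_rec = np.asarray(img_rec, dtype=float).squeeze()
    mse = float(np.mean((img - img_rec) ** 2))
    # Re-binarize the reconstruction and count mismatched pixels
    binarized = (img_rec >= threshold).astype(float)
    pixel_error = float(np.mean(binarized != img))
    return mse, pixel_error
```

With the arrays from the cell above, `reconstruction_errors(img, img_rec)` accepts the (64, 64, 1) input and the (1, 64, 64, 1) prediction directly, because both are squeezed to (64, 64) before comparison.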

Alright, the model seems to work as intended. Because there are only two latent codes, and because the binary nature of the dataset was not enforced during training (e.g., via a sigmoid output activation and/or a binary cross-entropy loss), the autoencoder cannot reproduce the original image perfectly; the compression is likely too lossy. Let’s now look at the interdependencies of the two codes in the latent space (Fig. 2 in the original paper), which the authors refer to as “interpolation”: one coordinate is fixed at 0 while the other is varied. We can do that by extracting the decoder from the model:

```python
pca_ae_decoder = pca_ae_model.layers[1]
```

and then supply different coordinates to it:

```python
vals = [-0.2, 0, 0.2, 0.4, 0.6]

_, ax = plt.subplots(1, len(vals), figsize=(12, 3))
for i in range(len(vals)):
    # Vary the first latent code while holding the second at 0
    img_dec = pca_ae_decoder.predict(np.array([[vals[i], 0.0]]))
    ax[i].imshow(img_dec[0, ..., 0], cmap=plt.get_cmap('gray'), vmin=0, vmax=1)
    ax[i].axis("off")
    ax[i].text(0, 5, f"z=({vals[i]}, 0)", c='w')
plt.show()
```

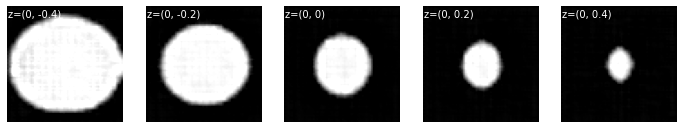

```python
vals = [-0.4, -0.2, 0, 0.2, 0.4]

_, ax = plt.subplots(1, len(vals), figsize=(12, 3))
for i in range(len(vals)):
    # Vary the second latent code while holding the first at 0
    img_dec = pca_ae_decoder.predict(np.array([[0.0, vals[i]]]))
    ax[i].imshow(img_dec[0, ..., 0], cmap=plt.get_cmap('gray'), vmin=0, vmax=1)
    ax[i].axis("off")
    ax[i].text(0, 5, f"z=(0, {vals[i]})", c='w')
plt.show()
```

It appears that, even without the iterative hierarchical model the authors adopted, the two codes in the latent space have taken on distinct roles in representing the input image: the first code controls the axis and the second controls the size of the ellipses.
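Both sweeps call `predict` once per latent value. A minimal alternative, assuming a decoder that accepts a batch of latent vectors of shape (n, 2) as in the cells above, is to build all latent vectors first and decode them in a single call. `latent_sweep` is a hypothetical helper, not part of Keras or the paper.

```python
import numpy as np


def latent_sweep(vals, dim, n_latent=2, fixed=0.0):
    """Build a batch of latent vectors (shape (len(vals), n_latent)) in which
    coordinate `dim` takes each value in `vals` and every other coordinate
    is held at `fixed`."""
    z = np.full((len(vals), n_latent), fixed, dtype="float32")
    z[:, dim] = vals
    return z
```

For example, `pca_ae_decoder.predict(latent_sweep([-0.4, -0.2, 0, 0.2, 0.4], dim=1))` should return one decoded image per row, which can then be plotted with the same `imshow` loop as above. Batching mainly saves the per-call overhead of `predict`; the decoded images are the same.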

Remarks

It should be pointed out that I tried a variety of models with different configurations of hidden layers (such as UpSampling2D) and activation functions (such as ReLU). Although the model above does not produce the most accurate reconstructions, it yields the most consistent latent space, with relatively independent codes.
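“Relatively independent” can also be checked numerically rather than only visually. A minimal sketch, assuming the latent codes for the whole dataset have been collected in an array `z` of shape (n_samples, 2) (for example with the encoder half of the model, which I assume is `pca_ae_model.layers[0]`), is to look at the largest off-diagonal entry of their correlation matrix. The helper names are illustrative.

```python
import numpy as np


def latent_correlation(z):
    """Pearson correlation matrix of latent codes z, shape (n_samples, n_latent)."""
    return np.corrcoef(np.asarray(z, dtype=float), rowvar=False)


def max_offdiag(c):
    """Largest absolute off-diagonal entry of a square matrix."""
    off = c - np.diag(np.diag(c))
    return float(np.max(np.abs(off)))
```

Values close to 0 indicate nearly uncorrelated codes. Note that zero correlation only rules out linear dependence between the codes, not every form of dependence.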

I also tried using only the custom layer to compute the entire covariance loss, instead of relying on the BatchNormalization layer to set the batch mean to 0. However, the split approach adopted by the authors (BatchNormalization for centering, the custom layer for the remaining term) consistently produced the best results (the desired latent codes) in my tests, and it also ran faster. It is possible that the BatchNormalization layer has side benefits beyond centering (such as stabilizing the gradients) that help training.
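Why BatchNormalization can take over part of the covariance loss follows from the definition: over a batch of size M, the covariance of two codes is the mean of their products minus the product of their means. Once the codes have zero batch mean, the second term vanishes and only the mean of products remains. The NumPy sketch below checks this on random data; the function names are illustrative, and this is not the `LatentCovarianceLayer` used in this notebook.

```python
import numpy as np


def batch_covariance(z):
    """Full (biased) batch covariance of codes z with shape (M, n):
    (1/M) sum_m z_i z_j  -  (1/M^2) (sum_m z_i)(sum_m z_j)."""
    M = z.shape[0]
    s = z.sum(axis=0)
    return z.T @ z / M - np.outer(s, s) / M**2


def product_term(z):
    """Only the first term, (1/M) sum_m z_i z_j, i.e. what is left
    once the batch mean of each code is zero."""
    return z.T @ z / z.shape[0]
```

For centered codes the two functions agree, while for codes with a nonzero mean the product term alone overestimates the covariance, which is why the centering step matters if only the product term is penalized.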

CNN Autoencoder

For comparison, let’s look at a conventional autoencoder without the custom layer that penalizes latent covariance. I will keep all the other layers the same (including BatchNormalization, to ensure similar values in the latent space).

```python
encoder = keras.models.Sequential([
    keras.layers.Conv2D(4, (3, 3), padding='same', input_shape=[64, 64, 1]),
    keras.layers.LeakyReLU(),
    keras.layers.MaxPool2D((2, 2)),
    keras.layers.Conv2D(8, (3, 3), padding='same'),
    keras.layers.LeakyReLU(),
    keras.layers.MaxPool2D((2, 2)),
    keras.layers.Conv2D(16, (3, 3), padding='same'),
    keras.layers.LeakyReLU(),
    keras.layers.MaxPool2D((2, 2)),
    keras.layers.Conv2D(32, (3, 3), padding='same'),
    keras.layers.LeakyReLU(),
    keras.layers.MaxPool2D((2, 2)),
    keras.layers.Conv2D(64, (3, 3), padding='same'),
    keras.layers.LeakyReLU(),
    keras.layers.MaxPool2D((2, 2)),
    keras.layers.Flatten(),
    keras.layers.BatchNormalization(scale=False, center=False),
    keras.layers.Dense(2),
    keras.layers.LeakyReLU(),
])
```
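As a sanity check on this architecture, the shapes can be traced by hand: each Conv2D uses `padding='same'` with the default stride of 1, so only the five 2×2 max-pooling layers shrink the image, from 64 to 2 pixels per side. The flattened feature vector entering BatchNormalization therefore has 2 × 2 × 64 = 256 entries before the Dense layer maps it to the two latent codes. A small sketch reproducing this arithmetic (`encoder_shapes` is a hypothetical helper):

```python
def encoder_shapes(size=64, filters=(4, 8, 16, 32, 64), pool=2):
    """Trace (height, width, channels) after each Conv2D(padding='same') +
    MaxPool2D stage and return the per-stage shapes and the flattened length."""
    shapes = []
    for f in filters:
        # 'same' padding with stride 1 keeps the spatial size;
        # the 2x2 pooling (default 'valid' padding) halves it
        size //= pool
        shapes.append((size, size, f))
    h, w, c = shapes[-1]
    return shapes, h * w * c
```

Calling `encoder.summary()` on the Keras model should report the same spatial sizes and a Flatten output of length 256.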